Monitoring and Logging with Prometheus: A Practical Guide

Monitoring and logging are critical components of any robust IT infrastructure. They ensure that systems run smoothly, issues are detected early, and performance is optimized. Prometheus has emerged as a popular tool for these tasks, offering powerful features for metrics collection, querying, and alerting. In this guide, we'll explore how to effectively use Prometheus for monitoring and logging, providing a practical, hands-on approach to get you started. Key topics include Prometheus architecture, installation, setup, metric collection, alerting, and integration with other tools.

If you are curious about who uses Prometheus, notable adopters include JPMorgan, Dice, Grafana Labs, and Visa, among others.

What is Prometheus? Understanding the Basics

Prometheus is an open-source monitoring and alerting toolkit designed for reliability and scalability. Originally developed at SoundCloud, it is now part of the Cloud Native Computing Foundation (CNCF). This section introduces the main Prometheus concepts.

Exporter

is a component or service that collects metrics from third-party systems or applications (such as databases, web servers, or hardware devices) and exposes them in a format that Prometheus can scrape. Exporters are commonly used for systems that don't have native Prometheus metric exposure.

Examples of exporters:

- The Node Exporter is used to expose system metrics (e.g., CPU, memory, disk usage) from Linux systems.

- The MySQL Exporter collects MySQL database metrics and makes them available for Prometheus to scrape.

Service discovery

is a mechanism in Prometheus that automatically detects and configures scrape targets (applications, services, or exporters) without manual intervention. Instead of manually configuring each target, Prometheus can discover them through integrations with various platforms, such as Kubernetes, AWS EC2, or Consul. Service discovery allows Prometheus to keep its list of scrape targets up to date, even as services are added or removed dynamically. As an example, in Kubernetes, Prometheus can automatically discover and monitor new pods as they are deployed.

Scraping

refers to the process by which Prometheus collects metrics from monitored services or systems. Prometheus periodically makes HTTP requests to endpoints that expose metrics, retrieves the data, and stores it in its time-series database. The frequency of scraping and the list of targets are defined in the Prometheus configuration. For example, Prometheus might scrape the /metrics endpoint of a web server every 15 seconds to collect HTTP request statistics.
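To make the scraped data more tangible, here is a minimal Python sketch that parses a small payload in the Prometheus text exposition format, the kind of response a /metrics endpoint returns. The sample payload and the helper name parse_metrics are invented for illustration, and the parser is deliberately simplified (it ignores timestamps and does not unescape label values).

```python
import re

# Sample /metrics payload (values invented for illustration).
SAMPLE = """\
# HELP http_requests_total Total HTTP requests.
# TYPE http_requests_total counter
http_requests_total{method="get",code="200"} 1027
http_requests_total{method="post",code="500"} 3
process_resident_memory_bytes 2.5e+07
"""

# metric_name{optional labels} value
LINE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)'
)
LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Return a list of (name, labels, value) tuples, skipping comment lines."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # HELP/TYPE metadata and blank lines carry no samples
        match = LINE.match(line)
        if match is None:
            continue  # simplified: silently skip anything unexpected
        labels = dict(LABEL.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


samples = parse_metrics(SAMPLE)
for name, labels, value in samples:
    print(name, labels, value)
```

Each parsed tuple corresponds to one time series sample that Prometheus would store together with the scrape timestamp.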

PromQL (Prometheus Query Language)

is the query language used in Prometheus to retrieve and manipulate time-series data. With PromQL, users can query the stored metrics, perform calculations, and create visualizations or alerts. PromQL is a powerful and flexible tool for analyzing data, supporting operations like filtering, aggregations, and rate calculations.

Collecting Metrics with Prometheus

Prometheus collects metrics by scraping targets at specified intervals. This section will explain how to define targets in the prometheus.yml configuration file and how to use exporters to expose metrics from different systems (like Node Exporter for Linux system metrics, Blackbox Exporter for probing endpoints, etc.). It will also cover writing custom exporters for your specific applications.

Callback

In the context of Prometheus, a callback refers to a function or handler that is invoked when Prometheus scrapes a target. This typically applies when metrics are not stored in memory directly but need to be computed or fetched on demand from an external source. The callback allows a target to dynamically return metrics at scrape time. This is common in custom instrumentation where specific logic is run to compute metrics only when needed.
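The idea can be sketched in a few lines of Python. This is not the API of any Prometheus client library; the CallbackGauge class, the my_queue_depth metric, and the pending_jobs list are hypothetical names used only to show a value being computed at scrape time rather than stored in advance.

```python
class CallbackGauge:
    """A gauge whose value is produced by a function each time it is collected."""

    def __init__(self, name, help_text, callback):
        self.name = name
        self.help_text = help_text
        self.callback = callback

    def collect(self):
        # The callback runs here, i.e. only when a scrape asks for the value.
        value = self.callback()
        return (
            f"# HELP {self.name} {self.help_text}\n"
            f"# TYPE {self.name} gauge\n"
            f"{self.name} {value}\n"
        )


# Hypothetical application state that the metric reflects.
pending_jobs = ["a", "b", "c"]
queue_depth = CallbackGauge(
    "my_queue_depth", "Jobs waiting in the queue.", lambda: len(pending_jobs)
)

first_scrape = queue_depth.collect()   # computed now: 3 jobs
pending_jobs.pop()
second_scrape = queue_depth.collect()  # computed again: 2 jobs
print(first_scrape)
print(second_scrape)
```

Because the value is read on demand, the application never has to remember to update the metric when the underlying state changes.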

Targets

In Prometheus, "targets" refer to the individual endpoints (typically applications or services) that Prometheus scrapes to collect metrics. These targets are defined in the Prometheus configuration file (prometheus.yml) and usually expose metrics via HTTP on a specific endpoint (e.g., /metrics). Targets are organized into groups and jobs, and Prometheus regularly queries them to fetch up-to-date data. A target could be a Node Exporter running on a server that Prometheus scrapes to gather system metrics.

Figure: Prometheus architecture, showing how Prometheus scrapes metrics from various sources, stores them in a time-series database, and provides alerting.

Installing and Setting Up Prometheus

This section provides a step-by-step guide to installing Prometheus on a Linux-based operating system. The goal is to have a working Prometheus instance by the end of this section.

Software/Hardware Description

- Oracle VirtualBox: A powerful x86 and AMD64/Intel64 virtualization product.

- ISO image of a Linux distribution (Ubuntu Budgie 22.04.2 LTS 64bit).

- Memory: 4 GB

- Hard Disk: 60 GB

- CPU: 4 CPUs

Prometheus Installation

Download site: https://prometheus.io/download/

Download version: prometheus-2.54.1.linux-amd64

Run the following commands in the terminal:

tar xvfz prometheus-*.tar.gz
cd prometheus-*
./prometheus

Launch your browser and navigate to the Prometheus page: http://localhost:9090

Figure: Prometheus initial page.

Figure: Prometheus catalog of accessible metrics.

process_resident_memory_bytes

This metric represents the amount of memory in bytes that a process is currently using in RAM (also called "resident memory"). It is a gauge-type metric that provides a real-time snapshot of memory consumption, which can help in identifying memory leaks or understanding the memory footprint of an application.

If you monitor a Prometheus server and see that process_resident_memory_bytes is consistently increasing, it could indicate a memory leak or inefficiency in the application.

Figure: Graph of resident process memory in bytes.

process_cpu_seconds_total

This metric shows the total amount of CPU time (in seconds) consumed by a process. It accumulates over time and represents the CPU usage since the start of the process. This metric is typically used to analyze CPU utilization and is useful when combined with the rate() function to monitor CPU usage over a specified time window.

rate(process_cpu_seconds_total[5m]) calculates the CPU usage rate over the last 5 minutes, giving insight into how intensively the application is using CPU resources.

![rate(process_cpu_seconds_total[5m]) calculates the CPU usage rate over the last 5 minutes](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhD_n5eErGqfJzCdTtLp9v6TPh_SEALnMKd_ZrWlDD3bKnQBS5ETs97egV84abwb9i8Y3GWrH0N-Wqv92nN86Oag6V6H5G5k49tYN6tB9N-fJ7a_UKjAmrtAIyMfAYmb_Ka19Lm0pXXFjvViJCPVUTPjJ-ErYDqOnP6UJcr80gdxVL7bpu8DoYRD7f10ys/w320-h161-rw/cpu.png)

Figure: rate(process_cpu_seconds_total[5m]) calculates the CPU usage rate over the last 5 minutes.

Prometheus is configured through a YAML file, prometheus.yml:

global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

Install Node Exporter

Node Exporter is the exporter that exposes system metrics (such as CPU, memory, and disk usage) from Linux hosts in a format that Prometheus can scrape.

Node Exporter Installation

Download version: node_exporter-1.8.2.linux-amd64.tar.gz

Run the following commands in the terminal:

tar xvfz node_exporter-*.tar.gz
cd node_exporter-*
./node_exporter

Edit the Prometheus configuration file, prometheus.yml, to include the following lines under scrape_configs:

  - job_name: "node"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9100"]

The entire Prometheus configuration file is:

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Scrape configurations: Prometheus itself and the Node Exporter.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "node"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9100"]

Restart Prometheus to load the updated configuration.

Figure: On the Prometheus target page, both "node" and "prometheus" are operational.

Figure: Graph depicting the resident memory usage of the node labeled "node."

Setting Up Alerts with Prometheus and Alertmanager

Prometheus provides robust alerting capabilities through its integration with Alertmanager. This section will guide you on how to define alert rules, configure Alertmanager, and route alerts to various channels such as email, Slack, or PagerDuty. We'll also discuss best practices for setting up alerts to avoid alert fatigue.

Alerting rules define conditions in Prometheus that trigger alerts when certain thresholds or patterns are met in the metric data. These rules are written in PromQL and evaluated periodically by Prometheus. If a rule's condition is true, it generates an alert, which is then sent to the Alertmanager for further processing (e.g., routing or notification).

Alertmanager

Alertmanager is a component in the Prometheus ecosystem responsible for handling alerts generated by Prometheus alerting rules. It manages the lifecycle of alerts, including deduplication, grouping, and routing them to various notification channels (e.g., email, Slack, PagerDuty). It also allows users to silence or inhibit alerts under certain conditions (e.g., during maintenance windows).

Alertmanager Installation

Download version: alertmanager-0.27.0.linux-amd64.tar.gz

Run from the command line:

tar xvfz alertmanager-*.tar.gz
cd alertmanager-*
./alertmanager

Revise the Prometheus configuration file, prometheus.yml, to match the following updated format:

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Rule files and Alertmanager configuration
rule_files: [rules.yml]

alerting:
  alertmanagers:
    - static_configs:
        - targets: [localhost:9093]
          # - alertmanager:9093

# Scrape configurations: Prometheus itself and the Node Exporter.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "node"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9100"]

Store a file named rules.yml in the prometheus directory:

groups:
  - name: test
    rules:
      - alert: InstanceDown
        expr: up == 0

You can customize Alertmanager using the alertmanager.yml file located in the alertmanager directory:

global:
  smtp_smarthost: 'localhost:25'
  smtp_from: 'yourprometheus@text.org'

route:
  # When a new group of alerts is created by an incoming alert, wait at
  # least 'group_wait' to send the initial notification.
  # This ensures that multiple alerts for the same group that start
  # firing shortly after one another are batched together in the first
  # notification.
  group_wait: 10s

  # After the first notification has been sent, wait 'group_interval' to send a batch
  # of new alerts that started firing for that group.
  group_interval: 5m

  # If an alert has successfully been sent, wait 'repeat_interval' to
  # resend it.
  repeat_interval: 30m

  # A default receiver
  receiver: "web.hook"

  # All the above attributes are inherited by all child routes and can
  # be overwritten on each.
  routes:
    - receiver: "web.hook"
      group_wait: 30s
      match_re:
        severity: critical|warning
      continue: true

    - receiver: "test-email"
      group_wait: 10s
      match_re:
        severity: critical
      continue: true

receivers:
  - name: 'web.hook'
    webhook_configs:
      - url: 'http://127.0.0.1:5001/'

  - name: 'test-email'
    email_configs:
      - to: 'yourprometheus@text.org'

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']

Launch your browser and navigate to the Alertmanager page: http://localhost:9093/#/alerts

Figure: Alertmanager alerts page.

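Whenever a target goes down, the InstanceDown rule fires and Alertmanager sends a notification through the configured receivers. With the routing shown above, the test-email receiver only handles alerts whose severity label is critical; alerts without a severity label go to the default web.hook receiver.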
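To make the routing behaviour concrete, here is a simplified Python model of how a routing tree like the one in alertmanager.yml could select receivers. It is a sketch, not Alertmanager's implementation: it only covers one level of child routes with match_re and continue, and it ignores grouping, timing, and inhibition. The function names are invented for illustration.

```python
import re

# Child routes copied from the alertmanager.yml shown earlier.
ROUTES = [
    {"receiver": "web.hook", "match_re": {"severity": "critical|warning"}, "continue": True},
    {"receiver": "test-email", "match_re": {"severity": "critical"}, "continue": True},
]
DEFAULT_RECEIVER = "web.hook"  # receiver of the root route


def route_matches(route, labels):
    # Regex matchers are anchored, and a missing label is treated as an empty string.
    return all(
        re.fullmatch(pattern, labels.get(name, "")) is not None
        for name, pattern in route["match_re"].items()
    )


def receivers_for(labels):
    """Return the receivers that would be notified for an alert with these labels."""
    selected = []
    for route in ROUTES:
        if route_matches(route, labels):
            selected.append(route["receiver"])
            if not route["continue"]:
                break  # without 'continue: true' the first match wins
    # If no child route matches, the alert is handled by the root route.
    return selected or [DEFAULT_RECEIVER]


print(receivers_for({"alertname": "InstanceDown"}))
print(receivers_for({"alertname": "HighCPUUsage", "severity": "critical"}))
print(receivers_for({"alertname": "HighMemoryUsage", "severity": "warning"}))
```

Under this model, adding a severity: critical label to an alerting rule is what makes its alerts reach the email receiver.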

Figure: Prometheus alert page indicating that a target has gone offline.

Alerting Rules and Recording Rules

The rules.yml file in Prometheus is used to define alerting rules and recording rules. This file is part of Prometheus’ alerting and monitoring configuration. While it’s typically named rules.yml, you can name it anything, as long as it’s referenced properly in the Prometheus configuration file (prometheus.yml).

Here’s a breakdown of the two types of rules that can be configured in this file:

Alerting Rules

Alerting rules are used to trigger alerts based on conditions evaluated from the metrics collected by Prometheus. When the conditions defined in the rule are met, Prometheus creates alerts, which can be sent to Alertmanager (another Prometheus component) for processing and routing (e.g., sending notifications to email, Slack, PagerDuty, etc.).

Example of an alerting rule in rules.yml:

groups:
  - name: example-group
    rules:
      - alert: HighCPUUsage
        expr: sum(rate(process_cpu_seconds_total[5m])) by (instance) > 0.8
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "High CPU usage detected on instance {{ $labels.instance }}"
          description: "CPU usage has exceeded 80% for the last 5 minutes on {{ $labels.instance }}."

alert: The name of the alert (e.g., HighCPUUsage).

expr: The PromQL expression that defines the condition for the alert (e.g., CPU usage above 80%).

for: The duration for which the condition must be true before an alert is triggered.

labels: Metadata to help identify and route the alert (e.g., severity level).

annotations: Extra information about the alert, like a summary and a description, which can be displayed in alert notifications.
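The effect of the for field is easiest to see as a small state machine. The following Python sketch is a simplified model, not Prometheus code: it assumes the rule is evaluated at a fixed interval and reports whether the alert would be inactive, pending, or firing after each evaluation. The function name and the sample inputs are invented for illustration.

```python
def alert_states(condition_results, eval_interval_s, for_s):
    """Return the alert state after each rule evaluation.

    condition_results: list of booleans, one per evaluation of `expr`.
    eval_interval_s:   seconds between evaluations.
    for_s:             the `for` duration in seconds.
    """
    states = []
    active_since = None  # time at which the condition first became true
    for index, is_true in enumerate(condition_results):
        now = index * eval_interval_s
        if not is_true:
            active_since = None  # any false evaluation resets the timer
            states.append("inactive")
            continue
        if active_since is None:
            active_since = now
        # The alert stays pending until the condition has held for the full duration.
        states.append("firing" if now - active_since >= for_s else "pending")
    return states


# Condition evaluated every 60 s with `for: 2m` (120 s).
states = alert_states([True, True, True, False, True], eval_interval_s=60, for_s=120)
print(states)
```

A short spike that disappears before the for duration elapses therefore never reaches the firing state, which helps to reduce noisy notifications.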

Recording Rules

Recording rules allow you to precompute and store the results of Prometheus queries. This is useful when you want to run complex queries frequently or across large datasets, as it reduces the computational overhead by precomputing the result and storing it as a new metric.

Example of a recording rule in rules.yml:

groups:
  - name: example-group
    rules:
      - record: job:http_inprogress_requests:sum
        expr: sum by (job) (http_inprogress_requests)

record: The name of the new metric to be recorded (e.g., job:http_inprogress_requests:sum).

expr: The PromQL query to compute the value of the metric (e.g., the sum of in-progress HTTP requests per job).
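As a rough illustration of what the recorded series contains, the following Python sketch performs the same aggregation as sum by (job) on a handful of in-memory samples. The sample values, instance names, and the sum_by_job helper are invented for illustration; Prometheus itself evaluates the PromQL expression and stores the result as a new time series.

```python
from collections import defaultdict

# (labels, value) pairs standing in for http_inprogress_requests samples.
samples = [
    ({"job": "api", "instance": "a:8080"}, 3),
    ({"job": "api", "instance": "b:8080"}, 5),
    ({"job": "worker", "instance": "c:9000"}, 2),
]


def sum_by_job(samples):
    """Aggregate sample values per job, dropping all other labels."""
    totals = defaultdict(float)
    for labels, value in samples:
        totals[labels["job"]] += value
    return dict(totals)


recorded = sum_by_job(samples)
print(recorded)
```

Each key in the result corresponds to one series of job:http_inprogress_requests:sum, identified only by its job label.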

Structure of rules.yml

The rules in the rules.yml file are grouped together using groups. Each group can contain multiple alerting and recording rules. Grouping is useful because Prometheus evaluates the rules within a group sequentially at a regular interval, all with the same evaluation time.

Example structure of rules.yml:

groups:
  - name: example-group
    interval: 30s # Evaluation interval for this group
    rules:
      - alert: HighMemoryUsage
        expr: sum(container_memory_usage_bytes) > 1e+09
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High Memory Usage Detected"
          description: "Memory usage is above 1GB for more than 5 minutes."

      - record: instance:node_cpu:rate1m
        expr: rate(node_cpu_seconds_total[1m])

Visualizing Data with Prometheus and the Java Client Library

A client library in Prometheus is a library provided for various programming languages (e.g., Go, Python, Java, Ruby) that allows applications to expose custom metrics to Prometheus. These libraries help developers instrument their code to define, create, and expose various metric types such as counters, gauges, histograms, and summaries. Once metrics are exposed, Prometheus can scrape the application's endpoint to collect the data for monitoring.

In my Java example code, I will demonstrate a straightforward application of Prometheus features.

Setting up the Maven application workspace

<dependencies>
  <dependency>
    <groupId>io.prometheus</groupId>
    <artifactId>simpleclient</artifactId>
    <version>0.16.0</version>
    <scope>compile</scope>
  </dependency>
  <dependency>
    <groupId>io.prometheus</groupId>
    <artifactId>simpleclient_hotspot</artifactId>
    <version>0.16.0</version>
    <scope>compile</scope>
  </dependency>
  <dependency>
    <groupId>io.prometheus</groupId>
    <artifactId>simpleclient_httpserver</artifactId>
    <version>0.16.0</version>
    <scope>compile</scope>
  </dependency>
  <dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>3.8.1</version>
    <scope>test</scope>
  </dependency>
</dependencies>

Counter

A counter is a metric that represents a monotonically increasing value that can only go up (or reset to zero). Counters are often used to track events, such as the number of HTTP requests, number of errors, or bytes processed. A counter should never decrease, but it can reset (usually after a service restart). For example, http_requests_total might count the total number of HTTP requests processed by a server.

In the example below, the inc() method is utilized within a loop solely to keep track of the loop's increments.

On the graph page, choose the my_counter_total metric. Clearly, the graph will display a steadily ascending ramp.

Rate

In Prometheus, rate is a function used to calculate the per-second average rate of increase of a given counter over a specified time range. It is most commonly applied to counters, such as the number of requests or errors, and helps in visualizing how fast something is changing over time. For example, using rate(my_counter_total[1m]) calculates the per-second increase of the my_counter counter over the last 1 minute.
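The arithmetic behind rate can be sketched in Python. This is a simplified approximation rather than the exact PromQL algorithm: the real rate() also extrapolates towards the edges of the time window, which this sketch leaves out. The function name simple_rate and the sample values are invented for illustration.

```python
def simple_rate(samples):
    """Approximate per-second rate of increase of a counter.

    samples: list of (timestamp_seconds, counter_value), oldest first.
    Returns None when there are not enough samples to compute a rate.
    """
    if len(samples) < 2:
        return None
    elapsed = samples[-1][0] - samples[0][0]
    if elapsed <= 0:
        return None
    increase = 0.0
    for (_, previous), (_, current) in zip(samples, samples[1:]):
        if current >= previous:
            increase += current - previous
        else:
            # A drop means the counter was reset (for example after a restart),
            # so the increase since the reset is the current value itself.
            increase += current
    return increase / elapsed


# Counter scraped every 15 s over one minute, growing by 30 per scrape.
steady = simple_rate([(0, 100), (15, 130), (30, 160), (45, 190), (60, 220)])
# Same idea, but the process restarted between the second and third scrape.
with_reset = simple_rate([(0, 100), (15, 130), (30, 10), (45, 40)])
print(steady, with_reset)
```

The reset handling is the reason rate should be applied to counters rather than gauges: a decrease is interpreted as a restart, not as a real drop in value.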

Gauge

A gauge is a metric that represents a value that can go up and down over time, as opposed to a counter, which only increases. Gauges are used to track values like current memory usage, CPU utilization, the number of active connections, or the last time a request was processed. For example, a gauge might track the time needed for a loop to complete, where the value can increase or decrease based on the conditions.

In the Java example, inProgress is incremented on every loop iteration, and last records the end time of every iteration.

Bucket

A bucket refers to a range in which an observation value falls when using a histogram. Buckets allow the histogram to track how many observations fall within certain ranges of values. You can define buckets based on what makes sense for the metric being tracked (e.g., latencies in milliseconds).

For example, you might have buckets for request latencies: [0.1, 0.5, 1, 2, 5] seconds. A request that takes 0.7 seconds would fall into the 1-second bucket. By analyzing bucket counts, you can understand the distribution of latencies (or other measured values).

Summary

A summary is a type of metric in Prometheus that captures observations like response sizes or request durations. It can calculate quantiles (like the 50th percentile, 90th percentile, etc.) and also keeps a running total of observations and their sum. Summaries are useful for tracking how long operations take or the size of responses, providing insights into distribution and latency.

Summaries are calculated locally in each instance, making it challenging to aggregate across multiple instances or services.

Histogram

A histogram is a type of metric that measures the distribution of observations (such as request durations or response sizes) by segmenting them into configurable buckets. Unlike summaries, histograms are designed to be aggregatable across multiple instances.
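The bucket bookkeeping can be sketched as follows. This is a minimal model in Python, not the client library's implementation; the SimpleHistogram class and the observed values are invented for illustration, and the bucket boundaries reuse the [0.1, 0.5, 1, 2, 5] example from the Bucket section. Prometheus exposes histogram buckets cumulatively, so each bucket counts every observation less than or equal to its upper bound, and an implicit +Inf bucket counts everything.

```python
import bisect
import math


class SimpleHistogram:
    """Counts observations per bucket and reports cumulative bucket counts."""

    def __init__(self, upper_bounds):
        # The +Inf bucket always exists and catches values above the last bound.
        self.upper_bounds = sorted(upper_bounds) + [math.inf]
        self.counts = [0] * len(self.upper_bounds)
        self.sum = 0.0

    def observe(self, value):
        # bisect_left finds the first bucket whose upper bound is >= value.
        index = bisect.bisect_left(self.upper_bounds, value)
        self.counts[index] += 1
        self.sum += value

    def cumulative_buckets(self):
        """Return (upper_bound, cumulative_count) pairs, as exposed to Prometheus."""
        running = 0
        result = []
        for bound, count in zip(self.upper_bounds, self.counts):
            running += count
            result.append((bound, running))
        return result


hist = SimpleHistogram([0.1, 0.5, 1, 2, 5])
for seconds in [0.05, 0.3, 0.7, 0.7, 1.5, 7.0]:
    hist.observe(seconds)

for bound, count in hist.cumulative_buckets():
    print(f"le={bound}: {count}")
```

Because the exposed values are plain counts per bucket, they can be summed across instances, which is what makes histograms aggregatable.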

Figure: Table of buckets distribution.

Figure: Graph of buckets distribution.

A quantile is a statistical measure that divides a dataset into equal-sized, consecutive intervals. For example, the 0.5 quantile (also called the median) divides the dataset such that 50% of the data falls below this value, and 50% falls above it. In Prometheus, quantiles are used with histograms to measure response times or latency at different levels (e.g., 90th or 99th percentile), giving you insight into the distribution of a particular metric.

Example: The 99th percentile (0.99 quantile) of request duration tells you that 99% of all requests completed in this amount of time or less, while 1% took longer.

histogram_quantile() is a Prometheus function used to estimate quantiles (e.g., the 90th or 99th percentile) from histogram buckets. It's often used to analyze response time distributions, giving insight into how long requests take at certain percentiles. This function is particularly useful when you want to understand the latency experience of the slowest users. It relies on the histogram tracking how many observations fall into each bucket (based on the defined thresholds). Histograms can be useful when analyzing latency, response size, and request durations across different services.
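The estimation idea can be sketched in Python: find the bucket that contains the requested rank, then interpolate linearly inside it. This is a simplified model written for illustration, not the PromQL implementation; it works on raw cumulative counts, whereas in PromQL the function is usually applied to the rate of the bucket series. The bucket data below is invented.

```python
import math


def estimate_quantile(q, buckets):
    """Estimate the q-quantile from cumulative histogram buckets.

    buckets: list of (upper_bound, cumulative_count), sorted by upper bound
             and ending with the +Inf bucket.
    """
    if not 0 <= q <= 1:
        raise ValueError("q must be between 0 and 1")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # First bucket whose cumulative count reaches the rank.
    index = next(i for i, (_, count) in enumerate(buckets) if count >= rank)
    upper, count = buckets[index]
    if math.isinf(upper):
        # The quantile lies beyond the last finite bound; report that bound.
        return buckets[-2][0]
    lower = buckets[index - 1][0] if index > 0 else 0.0
    below = buckets[index - 1][1] if index > 0 else 0
    if count == below:
        return lower
    # Assume observations are spread evenly inside the bucket.
    return lower + (upper - lower) * (rank - below) / (count - below)


buckets = [(0.1, 1), (0.5, 2), (1, 4), (2, 5), (5, 5), (math.inf, 6)]
median = estimate_quantile(0.5, buckets)
p95 = estimate_quantile(0.95, buckets)
print(median, p95)
```

The result is an estimate whose accuracy depends on the bucket boundaries: the narrower the buckets around the values of interest, the closer the interpolation gets to the true quantile.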

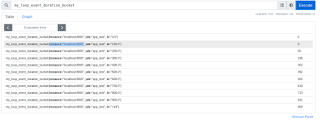

![histogram_quantile(0.95, rate(my_loop_event_duration_bucket[10m])) computes the 95th percentile of loop durations over the last 10 minutes. histogram_quantile(0.95, rate(my_loop_event_duration_bucket[10m])) computes the 95th percentile of loop durations over the last 10 minutes.](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhPkyl1JGUZ56Vi4tqewhHkwThhFuSkwzPuOsXIxEPl7_BnSjfIWC_y_gNCUgeg0gORO4wb8Uh1dhFBzkWSN3DGflvwpeOUav4kOMrun0Mf5c9FfJv6YIRTtTKp77Wj6WseGUIb5aapTB34cEDEVMrjJTQy8g41SgKjgD898EkwwcXTgr3LW5eoAIOXW-8/w320-h159-rw/histogram_qualti.png) |

| histogram_quantile(0.95, rate(my_loop_event_duration_bucket[10m])) computes the 95th percentile of loop durations over the last 10 minutes. |

Visualizing Data with Prometheus and Grafana

While Prometheus comes with a basic web UI for querying and visualizing data, Grafana is often used for more advanced dashboards and visualizations. This section will cover how to integrate Prometheus with Grafana, create custom dashboards, and use Prometheus queries (PromQL) to visualize key metrics.

Grafana download page: https://grafana.com/grafana/download

In the command line, enter:

sudo apt-get install -y adduser libfontconfig1 musl
wget https://dl.grafana.com/enterprise/release/grafana-enterprise_11.2.0_amd64.deb
sudo dpkg -i grafana-enterprise_11.2.0_amd64.deb

To start Grafana, enter:

sudo grafana-server -homepath /usr/share/grafana

Steps to Connect Grafana to Prometheus

1. Access the Grafana UI

- Open your web browser and go to your Grafana instance (typically http://localhost:3000 if running locally).

- Log in with your credentials (default username and password are both admin for the first login).

2. Add Prometheus as a Data Source

- Once logged in, on the left sidebar, click on "Connections" and then select "Data Sources."

- Click the "Add Data Source" button.

3. Choose Prometheus

In the list of available data sources, click on "Prometheus."

4. Configure the Prometheus Data Source

- You'll be taken to a settings page to configure the Prometheus data source.

- URL: Enter the URL of your Prometheus server (e.g., http://localhost:9090 or the address of your remote Prometheus instance).

- Access: Set this to "Server" (default) if Grafana and Prometheus are running on the same network or use "Browser" if you want the browser to connect to Prometheus directly.

- Basic Authentication: If your Prometheus instance requires authentication, configure the necessary credentials here.

5. Test the Connection

- Scroll down to the bottom of the page and click "Save & Test."

- If the connection is successful, you'll see a green message that says "Data source is working."

6. Create a New Dashboard

- To create a new dashboard, click on the "+ New Dashboard" button from the left sidebar or go to Dashboards → Manage → New Dashboard.

- Add visualization

- Select Prometheus Data source

Figure: Grafana empty panel.

7. Query Prometheus Metrics

- In the "Query" section, select "Prometheus" as the data source (it should already be selected if it's the only data source).

- Under Label filters, select job as the label and app_test as the value (job = app_test).

- In the Standard options panel, set Unit to bytes.

- Select Run queries.

- You can adjust the visualization type (graph, gauge, table, etc.), time range, and other settings.

- Add more panels as needed to visualize different metrics from Prometheus.

8. Save the Dashboard

Once you're satisfied with the panels, click "Save" in the top-right corner of the screen, give the dashboard a name, and save it for future use.
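If a panel stays empty, it can help to query Prometheus directly and confirm that the expected series exist. The following Python sketch builds a request against the Prometheus HTTP API instant query endpoint (/api/v1/query). The helper names are invented for illustration, the base URL assumes the local setup used in this guide, and run_instant_query only works while a Prometheus server is actually running at that address.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def instant_query_url(base_url, promql):
    """Build the URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def run_instant_query(base_url, promql, timeout=5):
    """Send the query and return the decoded JSON response (needs a running server)."""
    with urlopen(instant_query_url(base_url, promql), timeout=timeout) as response:
        return json.load(response)


# Same label filter as in the Grafana panel above (job = app_test).
url = instant_query_url("http://localhost:9090", 'up{job="app_test"}')
print(url)
```

Opening the printed URL in a browser, or calling run_instant_query with the same arguments, should return a JSON document whose status field is success when the server is reachable.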

Conclusion

Prometheus offers a powerful, flexible solution for monitoring and logging in modern IT environments. By following this practical guide, you should be well-equipped to set up Prometheus, collect and visualize metrics, configure alerts, and integrate it with other tools in your observability stack. Whether you're new to Prometheus or looking to enhance your existing setup, this guide serves as a comprehensive resource to achieve your monitoring and logging goals.

